I Am Lean QA (and You Can Too)

Greetings! I’m Aparna Bankston, one of the newest members of the JSTOR Labs team here in Ann Arbor, Michigan, and I’m your resident Quality Assurance (QA) Engineer, aka your “Guardian of Excellence!” Today, I’m going to share with you what it’s like to play this role on a team that works in the lean, agile way that JSTOR Labs does.

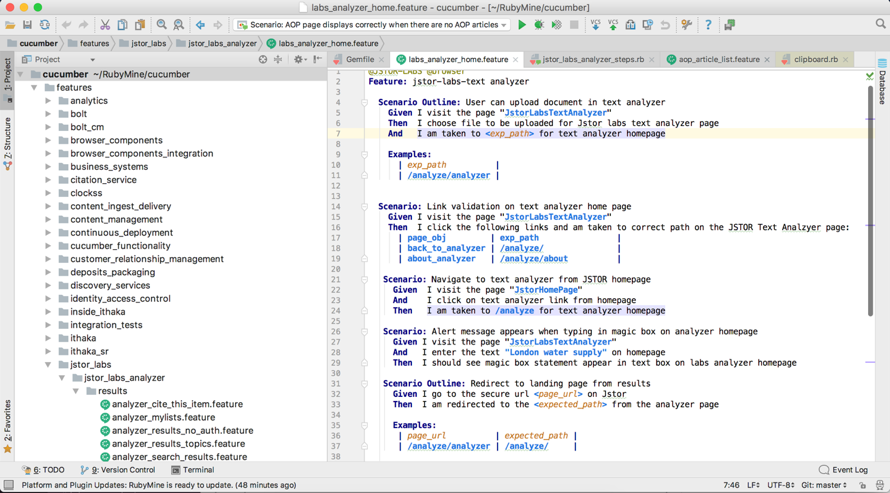

To do that, let’s first back up a second to talk about the role of the testing engineer on a team. When I first started in QA, we tested everything manually, going through a set of steps to make sure that the new code did what the product managers and developers said it should. In recent years, we’ve automated much, if not all, of this testing. We write testing scripts and scenarios that test the different anticipated user workflows (the screenshot below shows an example of these scripts). Those tests are collected in a test suite that runs every time code is deployed and tells developers whether their new code breaks something. This gives developers instant feedback on their work. It also saves me time, allowing me to move up in the process and work alongside the developer, so tests are in place earlier.
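To make that concrete, here is a minimal sketch of what one of those workflow tests might look like, written with Python's built-in `unittest` module. It is an illustration only, not our actual test code: `search_catalog`, the catalog titles, and `run_suite` are all hypothetical names invented for this example, and a real deploy hook would call something like `run_suite` from the build pipeline.

```python
import unittest


def search_catalog(query, catalog):
    """Hypothetical stand-in for the feature under test: case-insensitive title search."""
    needle = query.strip().lower()
    if not needle:
        return []
    return [title for title in catalog if needle in title.lower()]


class SearchWorkflowTest(unittest.TestCase):
    """One anticipated user workflow: a reader types a query and expects matching titles."""

    # Made-up sample data for the illustration.
    CATALOG = ["Journal of Botany", "Botanical Review", "Studies in History"]

    def test_query_returns_matching_titles(self):
        results = search_catalog("botan", self.CATALOG)
        self.assertEqual(results, ["Journal of Botany", "Botanical Review"])

    def test_blank_query_returns_nothing(self):
        self.assertEqual(search_catalog("   ", self.CATALOG), [])


def run_suite():
    """Run the suite the way a deploy step might and report whether everything passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SearchWorkflowTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

If `run_suite()` returns `False`, the developer knows right away that the latest change broke a workflow someone depends on.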

But here’s the catch. To decide whether a new line of code is working, you need to know what it should be doing. That’s pretty easy on a fairly typical development team, where the team understands the product and works against a well-defined backlog of work. But JSTOR Labs doesn’t work that way. The Labs team runs constant experiments and tests in an effort to figure out how a product or feature should work – we don’t know it in advance because we’re working in uncharted territory. When we’re working on a project, the technology and interaction design are constantly changing as the team iterates towards a design that’s useful and usable to scholars and students. That rapid change makes it tough to figure out when to write the automation that ensures quality is in place.

The question is no longer “can it be automated?” but “should it be automated, and when?”

A great example of this is the release of JSTOR Labs’ newest tool, Text Analyzer. When I joined, I worked with the team to figure out which functionality was less likely to change. A team like Labs is always learning, and part of that learning is understanding what is working. We quickly identified some core functionality, and I created automated tests for it. Those tests turned into a basic regression suite that runs regularly and that I monitor. This is important because as new developments are released, we want to ensure that core functionality continues to work. A team like Labs likes to “move fast and break things.” The work that I do helps the team move fast by helping it know what it’s breaking, and when.
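The idea of automating only the stable core can be sketched in a few lines of Python `unittest`. Everything here is an assumption made for illustration: `count_terms` is a hypothetical stand-in for a piece of settled functionality (it is not Text Analyzer's real code), and the class and function names are invented. The point is simply that the regression suite is built from the tests the team trusts to stay valid, while tests for still-changing features are left out until the design settles.

```python
import unittest


def count_terms(text):
    """Hypothetical core function: count how often each word appears."""
    counts = {}
    for word in text.lower().split():
        counts[word] = counts.get(word, 0) + 1
    return counts


class CoreRegressionTest(unittest.TestCase):
    """Behaviour the team has agreed is unlikely to change."""

    def test_counts_repeated_words(self):
        self.assertEqual(count_terms("Lean lean QA"), {"lean": 2, "qa": 1})

    def test_empty_text_has_no_terms(self):
        self.assertEqual(count_terms(""), {})


class ExperimentalLayoutTest(unittest.TestCase):
    """Still changing between iterations, so deliberately left out of the suite."""

    def test_placeholder(self):
        self.skipTest("design not settled yet")


def build_regression_suite():
    """Collect only the stable test cases into the regression suite."""
    suite = unittest.TestSuite()
    suite.addTests(unittest.defaultTestLoader.loadTestsFromTestCase(CoreRegressionTest))
    return suite


def run_regression():
    """Run the regression suite and return (tests run, all passed)."""
    result = unittest.TextTestRunner(verbosity=0).run(build_regression_suite())
    return result.testsRun, result.wasSuccessful()
```

When a feature stops changing from one iteration to the next, its tests can graduate into `build_regression_suite`, which keeps the suite small enough to run regularly and trustworthy enough to act on.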