Rembrandt Project Image Matching

In the Exploring Rembrandt project conducted earlier this year by the JSTOR Labs and Artstor Labs teams we looked at the feasibility of using image analysis to match images in the respective repositories. The JSTOR corpus contains more than 8 million embedded images and the Artstor Digital Library has more than 2 million high quality images. There is unquestionably a significant number of works in common between these image sets, especially in articles from the JSTOR Art and Art History disciplines. Matching these images manually would be impractical so we needed to determine whether this could be done using automation.

A key element that we wanted to incorporate into the Exploring Rembrandt prototype was the linking of images in JSTOR articles to a high-resolution counterpart in Artstor where these existed. This would allow a user to click on an image or link in a JSTOR search result and invoke the Artstor image viewer on the corresponding image in Artstor. For instance, when selecting the Night Watch in the Exploring Rembrandt prototype a list of JSTOR articles associated with the Night Watch is displayed and if the article includes an embedded image of the painting it is linked to the version in Artstor as can be seen here.

To accomplish this we needed to generate bi-directional links for the 5 Rembrandt works selected.

For the image matching we first identified a set of candidate images (query set) in JSTOR by searching for images in articles in which the text ‘Rembrandt’ occurred in either the article title or in an image caption. This yielded approximately 9,000 images. Similarly in Artstor, 420 candidate images associated with Rembrandt were identified and an image set was created for them. JSTOR images were compared against Artstor images. For this we used OpenCV, an open source image-processing library supporting a wide range of uses and programming languages and operating systems.

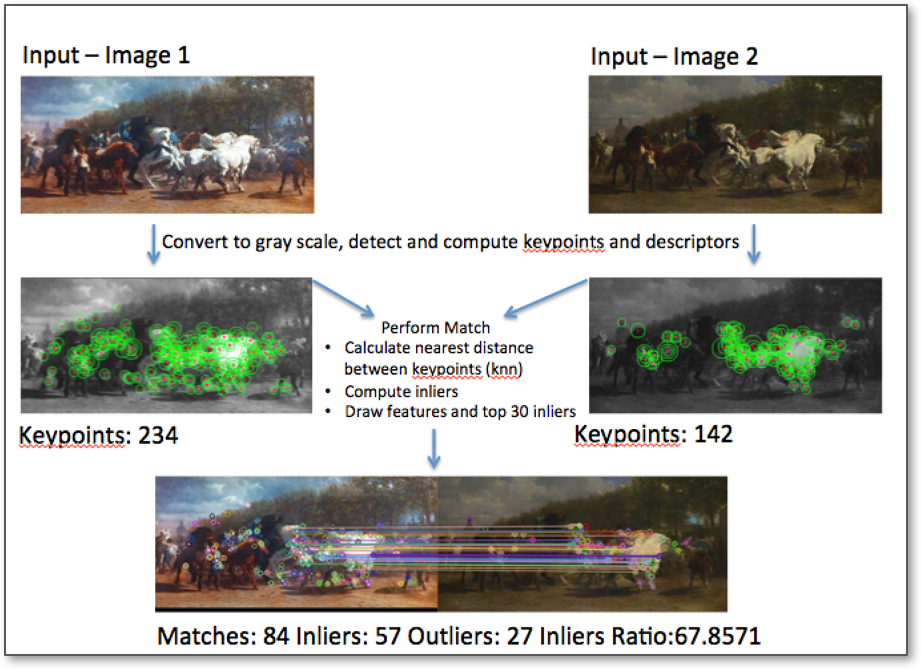

For the matching of images we designed a pipeline process as illustrated below.

Artstor images were the training set and JSTOR images were the query set.

The first phase ensures that the training and query sets are similar in colorspace and longside. The images are resized to 300px longside, converted to grayscale and keypoints and descriptors are extracted. Images that do not have more than 20 keypoints are discarded, since they end up creating excess false positives. The 300px size and 20 keypoints attributes were determined through experimentation and reflects a sweet spot for accuracy and computational efficiency in our process.

The second phase performs the matching. The process utilizes AKAZE algorithm; efficient for matching images that contain rich features. The generated keypoints between two images are compared and the nearest distance between each of keypoints is measured for similarity or likeness.

The final phase collects the data and exports the result as a csv file.

The tuning parameters for Rembrandt set:

- Max long side 300px

- Keypoints > 20,

- Matches > 30

- Inliers > outliers.

This image matching process identified 431 images from the 9,000 JSTOR candidates with probable matches to one or more of the 420 Artstor images. These 431 images were then incorporated into the Exploring Rembrandt prototype.

While the number of JSTOR and Artstor images used in this proof of concept was relatively small, 9,000 and 420, respectively, the technical feasibility of automatically matching images was validated.

The main lesson learned was finding the tuning parameters for matching between two vastly different types of corpuses. Further work should apply the same tuning parameters, visualize the results and build up from there.

Future work will include further experimentation and tuning of the myriad of parameters used by OpenCV and the scaling of the processing enabling this to be performed on much larger image sets, eventually encompassing the complete 10 plus images in the two repositories.