Initial User Research

This is the third in a series of blog posts about the process JSTOR Labs uses for its Discovery Projects. You can read the introductory post about the process here, and learn about discovery projects here.

When JSTOR Labs starts a project, our first job, once we have defined what we want to do, is to explore that territory. Usually, the territory consists of people (most often students, researchers, and teachers) who are currently doing some kind of research. These are the target users we want to help with the project, but we can’t figure out how we might help them without first understanding who they are and what they currently do.

This research on the target users can take a few forms. When we have a large project and enough time, we do an ethnographic study to learn how they work. When working lean, particularly on discovery projects, we instead interview a handful of users from the target discipline or use case we’re focused on. This is the process we use for that user research:

Step 1: Define who the target user is.

Usually for us, this has been done as part of setting up (aka creating the sandbox for) a project. This target user should be specific enough to provide focus but not so specific that it’s limited to, like, three people.

Step 2: Recruit users to interview.

Sometimes recruiting people to interview is easy! If we're looking for history undergrads, for example, all we need to do is post a flyer at a local university. Other times it's a lot harder to find people doing the esoteric type of scholarship we're looking for, and we may need to reach out to our personal networks, post a recruitment survey via JSTOR social media, or find a scholarly society that works in that area and ask for their contacts. Either way, we aim to recruit as diverse a group of users within our target category as possible.

Step 3: Prepare for the interviews.

Once we have recruited appropriate scholars to interview, we schedule an hour-long session with each of them. If we have a pre-existing relationship with them, or their seniority would make an honorarium inappropriate, we send some JSTOR goodies (like a shirt, notebook, or tote bag) with a handwritten thank-you note. Most of the time, though, we offer Amazon gift cards in appreciation for the interviewee’s time.

I write a protocol for each set of interviews, and while it varies for each discipline or problem, a few pieces stay consistent. At a high level, I want to know:

- Demographics, to gauge how squarely they fit into the audience we’re seeking.
- A description of their work in their own words. We need to understand their current process to work with it rather than against it.
- The problems they are facing in research. Sometimes these come across naturally when they describe their work, and sometimes they require more probing questions. Solving someone’s problem is often the best way to ensure you’ve made useful software.
- What they imagine as the ideal solution to their problem. We often ask, “If you had a magic wand, and there were no technical limitations, how would this work?”

Here is an abstracted starting point for the interview format; these questions are relevant for almost every person we talk to. Of course, we customize it and add questions depending on the discipline or problem space.

- You’ve worked on _____________ and taught ____________. What am I missing?
- When did you get started in the field?
- From a big picture and higher goals perspective, why are you in this field?
- What is your current research project?

Notes: I do some background research on each interviewee and, if possible, know the answers to most of these questions going into the interview. I share my understanding, and they can correct or add to it. Ideally, this gives the interviewee a sense of how valuable and important they are to us, much as a job candidate shows respect by researching a company before interviewing there. We’re not wasting their time with factual questions whose answers are easy to find; we want their insight and reflections instead.

- Which resources do you use for research?
- How do you identify your research questions?
- To what extent do you rely on primary vs. secondary sources? Where do you start?
- Where do you find your primary sources and which types do you use?
- Where do you find your secondary sources?
- What tools do you use to accomplish [task]? What do they get right? What do you wish worked better?
- [If teaching] What’s the hardest part about teaching this subject in particular? For students, what’s the hardest part about learning it?

Notes: these questions are more, shall we say, low-level and practical than some scholars are used to. Some are eager to share thoughts about paradigms of consciousness and subdialectic structuralism in Rushdie, when we really just want to know whether they save their bibliography in Word or Zotero. ¯\\\_(ツ)\_/¯ Interviews may veer off course in this way, and we have to try to steer them back. It’s all to be expected, because this is a conversational, semi-structured discussion.

If you could fix [process/problem] with a magic wand, what would that look like?

Notes: Some answers are more pragmatic than magical (“I wish OCR had higher accuracy so I could search the text with more confidence”), while others truly are in the realm of the supernatural (“I wish I had a time machine to take my history students back, so they could really understand the time period”). Either way, the answer reveals their deepest pain point and why it matters to them.

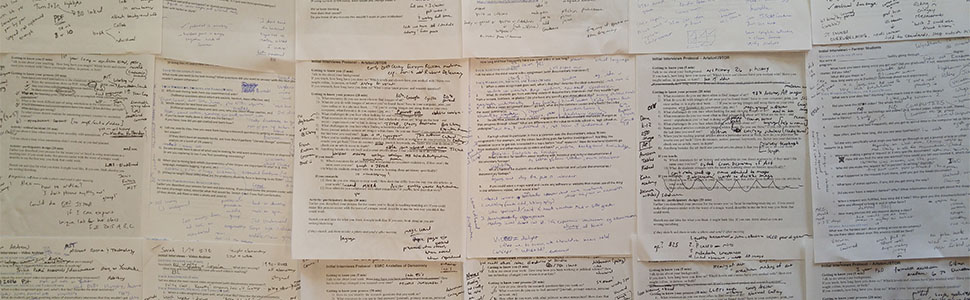

Step 4: Conduct the interviews.

Before an interview, we designate a notetaker, because it’s difficult to ask the pre-planned questions, follow the scholar’s narrative, think on your feet to ask meaningful follow-up questions, AND write all of that down. In my opinion, it’s better for the notetaker to err on the side of verbosity, because at the beginning of a project we don’t know which pieces of information will turn out to be important. In formulating follow-up questions, the Master-Apprentice Model, in which the interviewer takes the role of an apprentice learning a craft from the interviewee, is a helpful mental framework, since we want to learn how researchers of various types do their work.

For video call interviews, we minimize the number of visible observers, for example by having two listeners in the NY office call in together and seating Ann Arbor observers out of camera view. The observers aren’t a secret, though: I let interviewees know that team members are listening and taking notes, and may join in with questions at the end.

When I’m leading the interviews, it’s important to keep the tone both friendly and respectful: the interviewees shouldn’t feel grilled, or feel like we’re reading off a litany of questions. Instead, the interview should feel conversational, which helps them relax and open up. Interviewees often remark afterward on what a pleasure the conversation was, since they seldom take the time to interrogate their own processes and our questions helped them do so.

At the end of an interview, we send a gift card and/or a thank-you note and do a quick evaluation of what was or wasn’t effective or interesting in the interview. The protocol isn’t sacred: if a few interviews show that a question doesn’t make sense or isn’t helpful, we can drop it from future interviews.

Step 5: Analysis

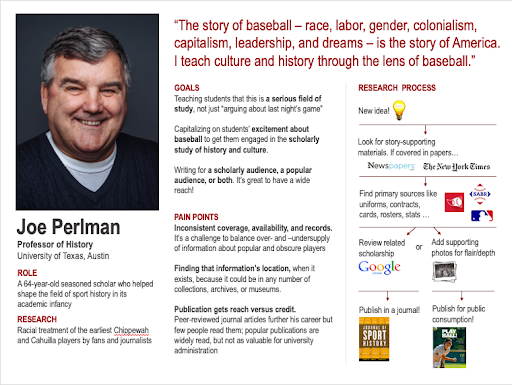

When all the interviews are finished, I synthesize the findings so they can be shared with the team. This often takes the form of a persona, but might also be a presentation or other document. Personas are fictional aggregations of the people we interviewed; they keep user needs and habits at the forefront, and we can refer back to them in discussions (e.g., “How can we solve Mary’s problems?” or “That solution won’t work for Juan”). Here are a few example personas:

Looking back at all the groups we’ve done this type of research with, quite a list comes to mind (and I’m sure I’ve forgotten a few). We have spoken to scholars of critical plant studies, Shakespeare, civil rights, art history, democracy, the U.S. Constitution, Dante, public health, literature, the U.S. slave trade, history, sustainability, and baseball. We’ve also spoken to teachers and researchers who use video, annotations, text mining, smartphones, monographs, maps, and critical editions.

I love the variety of people we talk to, and though we’ll never understand every aspect of the scholarly process, we’ll keep learning, one discipline or tool at a time.